Fast-Inf: Ultra-Fast Embedded Intelligence on the Batteryless Edge

Jul 1, 2024

Leonardo Lucio Custode

Pietro Farina

Eren Yildiz

Renan Beran Kılıç

Kasim Sinan Yildirim

Giovanni Iacca

Image credit: Unsplash

Abstract

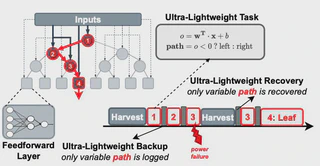

Batteryless edge devices are extremely resource-constrained compared to traditional mobile platforms. Existing tiny deep neural network (DNN) inference solutions are problematic due to their slow and resource-intensive nature, rendering them unsuitable for batteryless edge devices. To address this problem, we propose a new approach to embedded intelligence, called Fast-Inf, which achieves extremely lightweight computation and minimal latency. Fast-Inf uses binary tree-based neural networks that are ultra-fast and energy-efficient due to their logarithmic time complexity. Additionally, Fast-Inf models can skip the leaf nodes when necessary, further minimizing latency without requiring any modifications to the model or retraining. Moreover, Fast-Inf models have significantly lower backup and runtime memory overhead. Our experiments on an MSP430FR5994 platform showed that Fast-Inf can achieve ultra-fast and energy-efficient inference (up to 700x speedup and reduced energy) compared to a conventional DNN.
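The logarithmic-time claim can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes a complete binary tree stored in heap order, where each internal node is a single linear unit whose sign picks the left or right child, and each leaf is a small linear classifier. The names `route`, `infer`, `NODE_W`, `NODE_B`, `LEAVES`, and `FALLBACK` are hypothetical, and so is the per-leaf fallback label used when the leaf computation is skipped; the abstract does not say what a Fast-Inf model outputs in that case.

```python
from typing import List, Sequence, Tuple

# A leaf is modelled as one (weights, bias) pair per class (assumption).
Leaf = List[Tuple[List[float], float]]


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def route(x, node_w, node_b, depth: int) -> int:
    """Walk from the root to a leaf; returns the leaf index in [0, 2**depth)."""
    i = 0  # heap layout: children of node i are 2*i + 1 and 2*i + 2
    for _ in range(depth):  # one dot product per level, so O(depth) work
        go_right = dot(node_w[i], x) + node_b[i] > 0
        i = 2 * i + 1 + int(go_right)
    return i - (2 ** depth - 1)  # subtract the number of internal nodes


def infer(x, node_w, node_b, leaves: List[Leaf], depth: int,
          fallback: Sequence[int] = (), skip_leaf: bool = False) -> int:
    """Predict a class; with skip_leaf=True the leaf classifier is not evaluated."""
    leaf = route(x, node_w, node_b, depth)
    if skip_leaf:
        # Hypothetical shortcut: return a label stored for this leaf's region.
        return fallback[leaf]
    scores = [dot(w, x) + b for w, b in leaves[leaf]]
    return max(range(len(scores)), key=scores.__getitem__)


# Toy depth-2 model on 2-D inputs: 3 internal nodes, 4 leaves, 2 classes.
NODE_W = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
NODE_B = [0.0, 0.0, 0.0]
LEAVES: List[Leaf] = [
    [([0.0, 0.0], 1.0), ([0.0, 0.0], 0.0)],
    [([1.0, 0.0], 0.0), ([0.0, 1.0], 0.0)],
    [([1.0, 0.0], 0.0), ([0.0, 1.0], 0.0)],
    [([0.0, 0.0], 0.0), ([0.0, 0.0], 1.0)],
]
FALLBACK = [0, 1, 0, 1]
```

In this sketch a tree of depth `d` has `2**d` leaves but evaluates only `d` node units and at most one leaf per input, which is where the logarithmic cost comes from. Calling `infer(x, NODE_W, NODE_B, LEAVES, 2, FALLBACK, skip_leaf=True)` trades the leaf computation for a stored label without touching the trained parameters.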

Publication

The 22nd ACM Conference on Embedded Networked Sensor Systems